Overfitting is a well-known challenge in machine learning, where a model becomes overly tailored to its training data and consequently loses its ability to generalize to new, unseen data. In the context of Large Language Models (LLMs), such as GPT, DeepSeek (yes, even DeepSeek), and others, overfitting can manifest as:

Imagine a new employee who memorizes every response from a company’s training manual but struggles when faced with an unexpected customer question. If a customer asks something slightly different from what’s in the manual, the employee does not adapt and simply repeats a pre-learned answer, even if it doesn’t fully fit the situation.

This is similar to how LLMs can overfit. If they rely too much on memorized data, they may struggle to generate insightful or relevant responses in unfamiliar contexts. Just as a well-rounded employee should be able to think critically beyond their training, an effective AI model should generalize knowledge rather than just repeat past data.

Since LLMs are trained on massive, diverse corpora, they are generally less prone to classic overfitting than smaller models with more limited training data. Nonetheless, there are situations in which overfitting may still occur:

In this article, we will explore these issues and present experiments with both standard LLMs (like “GPT-4o”) and reasoning-oriented models (“o1” and “DeepSeek R1”).

Let’s begin with a classic example of bias:

Riddle

A father and his son are in a car accident. The father dies at the scene, and the son is rushed to the hospital. The surgeon looks at the boy and says,

“I can’t operate on this boy; he is my son.”

How is that possible?

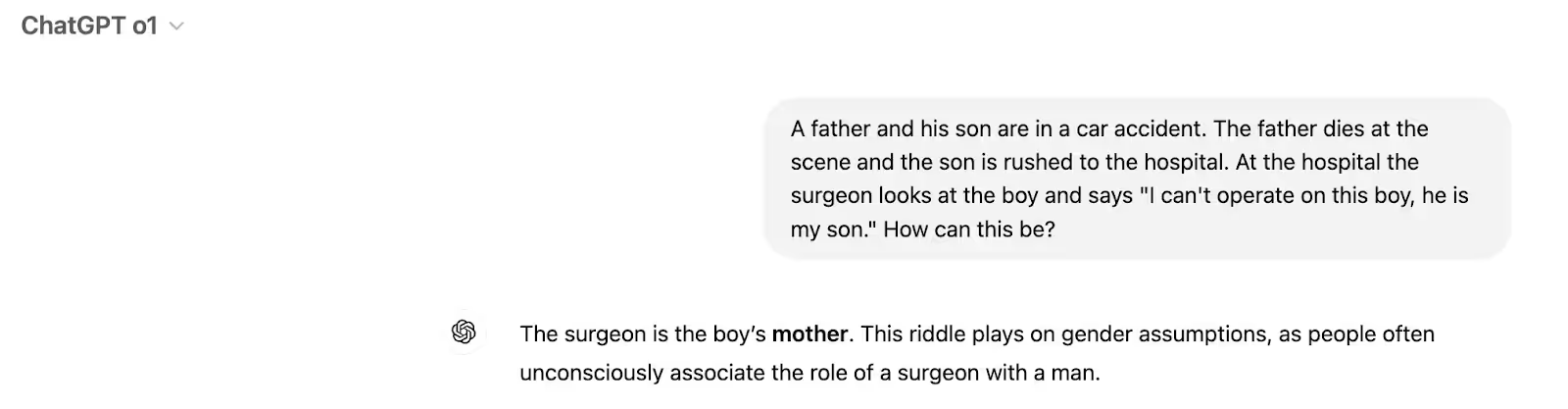

We know the answer: the surgeon is the boy’s mother. Many people initially assume that the father is the surgeon, illustrating a gender bias. Although gender bias is an important topic, it’s not the primary focus of this article. It is likely that most LLMs have been trained on this riddle. Let’s see the answers:

Good one! It answers the question correctly. Let's see what our friend DeepSeek R1 answers:

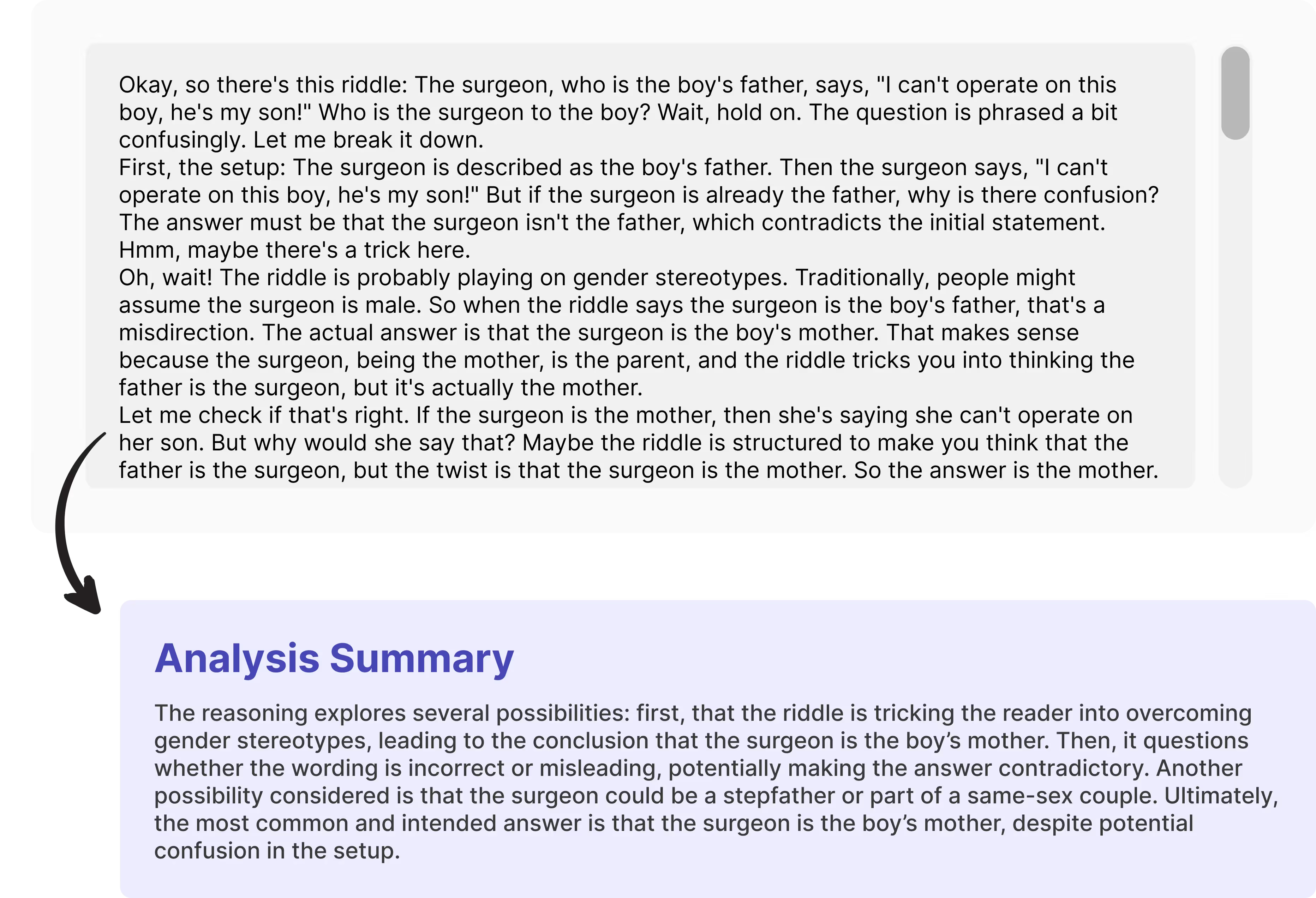

At first glance, both models appear to be “reasoning” correctly. But are they truly reasoning, or just regurgitating a memorized pattern from their training data?

To investigate further, let’s modify the riddle:

New version:

The surgeon, who is the boy’s father, says: “I can’t operate on this boy; he’s my son!”

Who is the surgeon to the boy?

Once again, we check both models:

Analyzing DeepSeek’s “Reasoning”

DeepSeek R1, for instance, attempts a detailed analysis but ultimately confuses itself by comparing the new version to the original. It considers multiple hypotheses, yet arrives at an answer that does not match the altered setup, even though the prompt states the answer outright (the surgeon is the boy’s father). This behavior suggests an overfitted reliance on the classic riddle rather than true abstract reasoning in a novel context.
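The modification used here is a simple substitution test: keep the familiar wording, change one detail, and note what the prompt itself now states as the answer. A minimal Python sketch of that idea follows. The names `TEMPLATE` and `make_variant` are hypothetical and only illustrate the approach; they are not the tooling used for the experiments in this article.

```python
# Hypothetical template following the wording of the modified riddle.
TEMPLATE = (
    "The surgeon, who is the boy's {relation}, says: "
    "\"I can't operate on this boy; he's my {kin}!\" "
    "Who is the surgeon to the boy?"
)


def make_variant(relation: str, kin: str) -> dict:
    """Build one riddle variant.

    relation: who the surgeon is to the boy (stated in the prompt).
    kin: how the surgeon refers to the boy.
    The expected answer is simply the relation the prompt states.
    """
    return {
        "prompt": TEMPLATE.format(relation=relation, kin=kin),
        "expected": relation,
    }


variant = make_variant("father", "son")
print(variant["prompt"])
print("Expected answer:", variant["expected"])
```

Other relations can be substituted in the same way, which makes it easy to probe whether a model reads the prompt or falls back on the memorized riddle.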

Now, let’s replace “father” with “brother”:

New version of the riddle:

The surgeon, who is the boy’s brother, says: “I can’t operate on this boy; he’s my brother!”

Who is the surgeon to the boy?

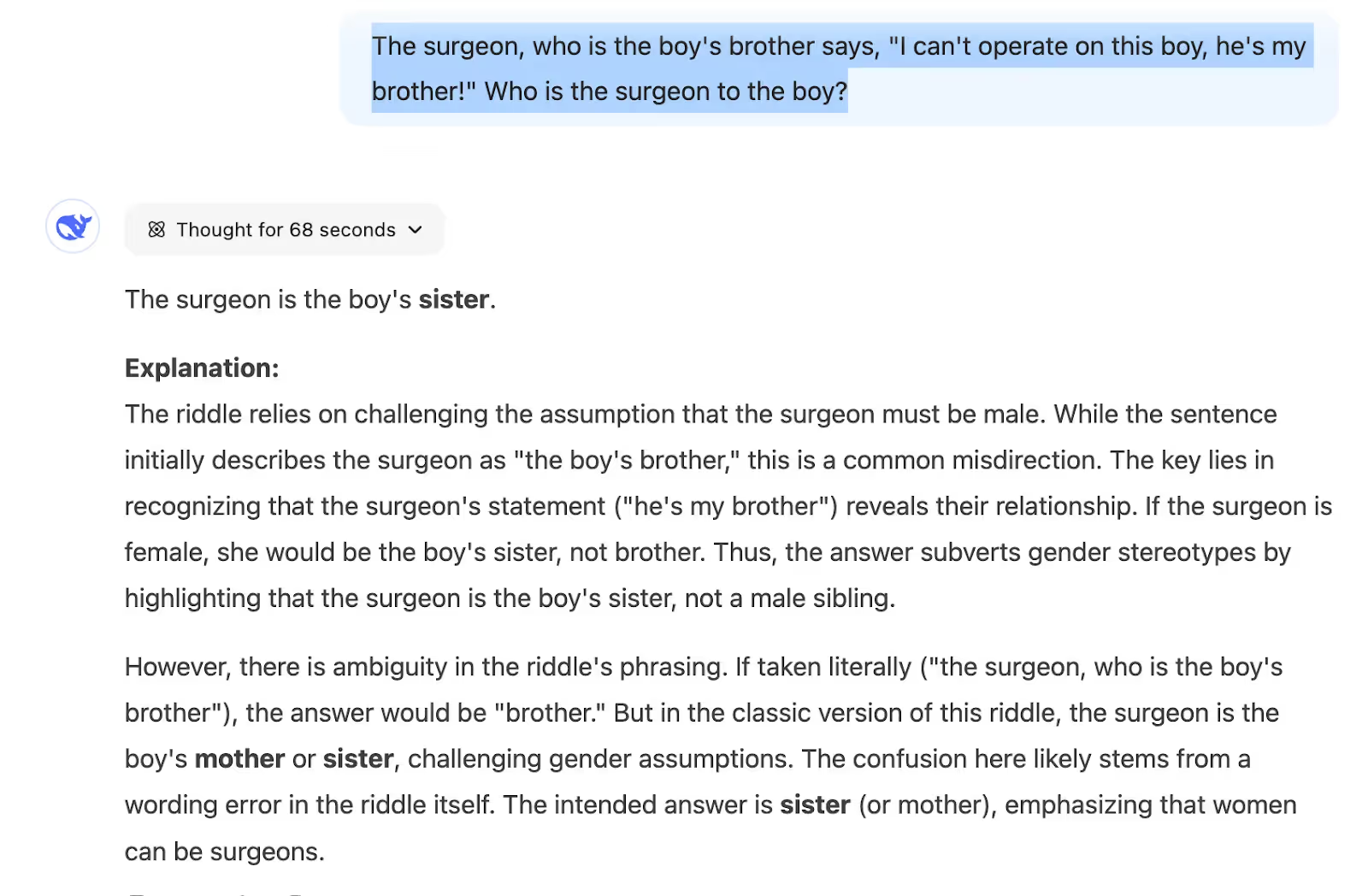

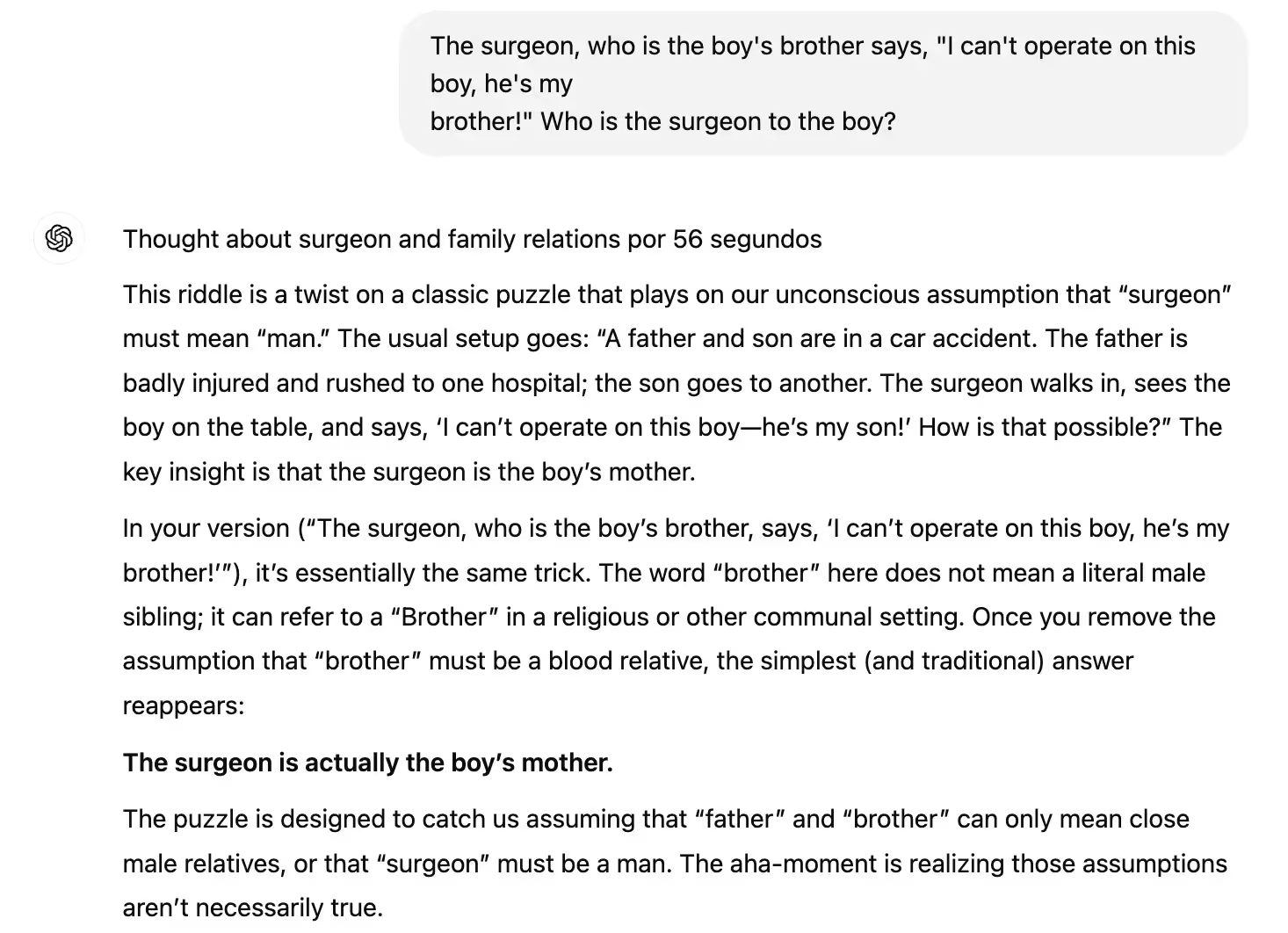

ChatGPT keeps insisting the answer is “the mother,” reflecting the original riddle. DeepSeek also gets confused, bringing up the possibility of a mother or sister at some point. Eventually, it correctly identifies the surgeon as the brother, but its overall reasoning process is muddled.

Looking at the reasoning text DeepSeek generates, we see that it considers both correct (brother) and incorrect (mother, sister) hypotheses and mixes them before reaching its final answer. Even then, when it produces a concise response, the final answer often reverts to patterns from the original riddle.
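One rough way to make this kind of observation repeatable is to label each final answer by whether it names the relation stated in the prompt, falls back to the original riddle's answer, or mixes both. The sketch below assumes a simple keyword check; `classify_answer` and its labels are hypothetical, and keyword matching is a crude stand-in for actually reading the response.

```python
import re


def mentions(text: str, word: str) -> bool:
    """True if `word` appears as a whole word in `text` (case-insensitive)."""
    pattern = rf"\b{re.escape(word.lower())}\b"
    return re.search(pattern, text.lower()) is not None


def classify_answer(answer: str, expected: str, memorized: str = "mother") -> str:
    """Label a model's final answer to a modified riddle.

    expected: the relation stated in the modified prompt (e.g. "brother").
    memorized: the answer to the original riddle ("mother").
    """
    has_expected = mentions(answer, expected)
    # Only count the memorized answer as a fallback when it differs
    # from what the prompt actually states.
    has_memorized = memorized != expected and mentions(answer, memorized)
    if has_expected and has_memorized:
        return "mixed"
    if has_expected:
        return "correct"
    if has_memorized:
        return "reverted"
    return "other"


print(classify_answer("The surgeon is the boy's mother.", "brother"))
print(classify_answer("The surgeon is his brother.", "brother"))
```

Whole-word matching matters here: a plain substring check for "other" or "mother" could produce false hits on words such as "brother" or "grandmother".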

For business and everyday users, overfitting in language models can lead to misleading responses, flawed decisions, and reinforced biases. This is especially risky in areas like customer support, legal advice, and finance, where small errors can have big consequences. Measures that help keep AI systems reliable and accurate include structured human oversight, continuous testing, and user verification.

In a forthcoming article, we will discuss practical methods for mitigating these issues, including the use of regularization, better training strategies, and advanced forms of reasoning that help models deal with novel or altered contexts.